Amica

Full-stack meet-up coordinator with a multi-agent workflow

Summary

Like many people, I experienced the euphoria of quickly spinning up a fully functional app in a couple of hours by prompting vibe-coding agents. In my three-week AI Product Bootcamp, I built and tested three AI product prototypes. Most of the time was spent specc'ing and refining; the building time was incredibly short. Yet I found myself increasingly frustrated for not understanding the code. So I decided to take the long road, spending two weeks pair programming with Claude Code, to work on Amica, a full-stack meet-up coordinator with a multi-agent workflow. The app itself is simple. What I learn building it is not.

Why did I build this?

I hit a wall after building three AI prototypes with Replit agent: I could ship fast, but I couldn't debug intelligently. I delegated the build to Replit agent and trusted its implementation plan without questioning. When Replit's autonomous agent got stuck in loops, I had no mental model to understand the underlying issues or prompt it to try different approaches.

I wondered if understanding implementation details, such as infrastructure, databases, APIs, and deployment, would make me better at AI product decisions. What if I tried to deliberately slow down the process, to understand what's going on under the hood, rather than accepting suggestions blindly?

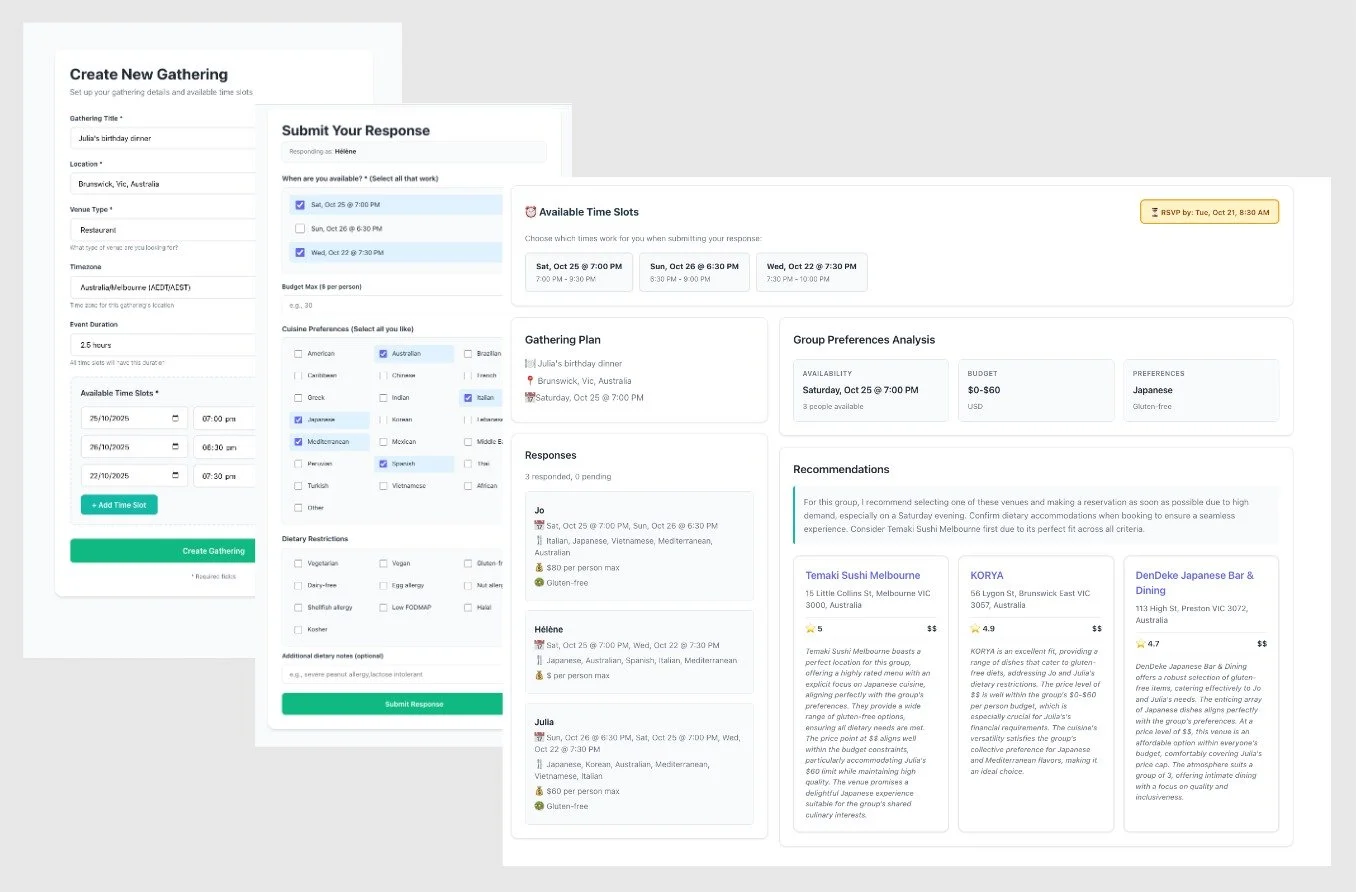

Some early mockups of Amica, the gathering coordination agent workflow, when I was still considering Replit as coding agent

I also wanted to explore agentic AI design patterns, to expand on LLM's capabilities and make them ever more useful. Amica was the perfect learning vehicle because it offered:

Real use case: organising meetups for a group with conflicting preferences is a challenge most of us have experience with

Multi-user coordination: group settings require async database state management

Agent workflow: preference analysis -> venue search -> recommendation

Tool use: integrate with Google Places API for venue search

Full-stack complexity: building an app that requires both front-end and backend forces understanding of how components integrate and interact with each other.

What I actually built

Amica offers a relatively simple workflow:

An authenticated user can create a gathering by specifying the location, venue type, duration, and a few timeslots for friends to choose from

After a gathering is created, the organiser can share its link where people can submit responses to select available time slots, specify any cuisine preferences and dietary requirements

Once there are enough responses, the organiser can trigger the agent workflow to find consensus in the group, search for venues and suggest the 3 best options for users to book

Try it yourself

Live demo: amica-rho.vercel.app

GitHub repo: github.com/nhungineer/amica

Note: This is a learning project, not production-ready. You may encounter bugs!

Project timeline

Day 1: Project scoping and schema design

Day 2: Finalise schema, generate database client and build API endpoints

Day 3: Write Google Places API services, and wire up agent logic

Day 4: Test backend code with curl commands, and code review

Day 5: Start frontend work setup with the main app, connect to the backend

Day 6: Build out the create gathering form and submit the response form, result view

Day 7: Production deployment with seed users

Day 8: Add an authentication layer with passwordless login

Day 9: UI polish, dynamic timeslots, expand venue types

Day 10: Improve venue search, enhance agent logic and add Google Maps link to recommended venues

Reflections

Learning by going slow

I could've gotten Replit agent to whip this app up in 1-2 days, but it took me ~2 weeks to get this done pair programming with Claude Code. I would read through every single line of code and not move on to the next piece of work until I understood it well enough. Instead of having Claude Code install the packages, I asked it to walk me through the commands, explain them, and I'll do it, and ask questions when I run into errors. To overcome Claude Code's default mode of asking to do the work itself, I included these instructions in the CLAUDE.md file for the project so that it's aware of my objective and learning preferences.

For the actual code, Claude Code showed me the code changes, I read through them, ask questions, and then tried to create or update the file myself. I asked Claude Code to confirm my understanding of the code by giving me 3-5 questions. Some of them were really hard. I may have misunderstood a concept, but the best thing about this is that if I got it wrong, Claude Code would correct me and point out where the misunderstanding was. We went back and forth five rounds for me to get the concept of Promise() in JavaScript, and why it was important, but when it clicked, it was such a great aha moment.

--------------------------------------------------------------------

**Example: Claude Code's systematic questions to build my mental model**

--------------------------------------------------------------------

1. Schemas (src/agents/schemas.ts)

- What structure does each agent return?

- Why Zod instead of just TypeScript types?

2. Database layer (src/db.ts, prisma/schema.prisma)

- How does Prisma Client work?

- What's the gathering lifecycle?

3. Services (src/services/google-places.ts)

- How do we call external APIs?

- Why two API calls (geocode + places)?

4. Agents (preference → venue)

- How does generateObject() work?

- How do agents pass data to each other?

5. Orchestrator (src/agents/orchestrator.ts)

- How does it coordinate everything?

- What happens on errors?

6. API layer (src/routes/*, src/index.ts)

- How do routes connect to orchestrator?

- How does Express routing work? It can be painful, tiring at times, too. I felt like burning through tokens; I felt incapable: "Am I just wasting time trying to understand the technical details?". I remember being stunned that I struggled when I couldn't comprehend React, whereas backend code and database concepts seemed much more straightforward and, dare I say, intuitive! By now, I know the drill of walking away when hitting a wall, then trying again later. I spent half a day getting Claude Code to help me understand callback functions, props, the parent-child relationship and basic JavaScript syntax and concepts, until I could point out which is which and trace a function in the files.

--------------------------------------------------------------------

**Example: When I finally understood callback functions!**

--------------------------------------------------------------------

Questions for You:

1. What is the callback function in this case? (What's it called in App.tsx?)

2. Notice handleResponseSubmitted has no parameters - it's () => { ... }. Why do you think that is?

(Compare to handleGatheringCreated which takes gatheringId)

3. When SubmitResponse calls onResponseSubmitted() on line 79, what code executes?

Take a moment to trace through this one yourself using what you've learned!

> 1. Callback function is this case is handleResponseSubmitted.

2. I'm not so sure why handleResponseSubmitted parameter is empty. We don't need the responseID back in this case because the data

we're interested in is the gathering and all responses related to that gathering

3. When SubmitResponse calls onResponseSubmitted() on line 79, it executes the handleResponseSubmitted function defined in App.tsx, line 93-99

⏺ Excellent! All three answers are correct! 🎉Two weeks in, I still can't build an app from scratch without assistance. I don't have all the concepts fully absorbed - there are days I worry I'm just 0.5cm below the surface, with enough context to be dangerous but not enough to be effective.

But here's what changed: I went from accepting "the build failed" as a mysterious black box to investigating which TypeScript error, which environment variable was missing, and why the database migration failed. I went from nodding along when engineers said "we need a migration" to understanding when schema changes require them and when they don't.

The goal wasn't to become an engineer in 2 weeks. It was to build enough mental models to make better product decisions, spot when implementation doesn't match intent, and have technical conversations without just deferring to "whatever the engineer thinks."

On making technical decisions

Working closely with Claude Code on implementation forced me to think through technical trade-offs, not just accept suggestions:

Data modelling: Claude Code initially suggested storing timeslots as free-text for flexibility. I pushed back - this would make it impossible to do time-based logic (like calculating RSVP deadlines). We used DateTime instead, which enabled features like "set RSVP deadline to 24 hours before the earliest timeslot."

UX → implementation complexity: I noticed that configuring start/end times for each gathering was tedious. I suggested switching to duration instead (e.g., "2 hours" rather than separate start/end inputs). This seemed like a simple UX improvement, but it required updating the form validation, changing the date/time calculation logic before sending data to the backend, and modifying user-facing labels. A one-line UX change touched multiple frontend components.

Testing vs production patterns: During testing, we used hardcoded UUIDs. When Claude Code suggested adding a UUID input field to the frontend, I recognised this as throwaway test code. Instead of bolting on a field that users would never need, I insisted we build the proper flow (CreateGathering form → generate UUID → route to gathering page). This taught me to distinguish between "make it work" and "make it right."

Finding bugs in production: I discovered our timezone handling was broken - we were appending 'Z' to ISO strings without properly converting timezones. Users in different time zones would see incorrect times. Fixing this required understanding UTC conversion, ISO 8601 formats, and how JavaScript's Date objects work across browsers.

--------------------------------------------------------------------

**Example: Learning to push back on technical suggestions**

--------------------------------------------------------------------

> Most of it makes sense but I'd like to push back on having date/time as string, that seems incredibly dangerous. Given that we can use UI to force structured input, wouldn't it make sense to store it as proper timestamp? Timezone wise it can be associated with the gathering location, not user specific. I think string for time input is bad news, so many different interpretation. If we could force date through calendar picker, and time with time picker with range, that should be better? What am I missing?

⏺ You're absolutely right! This is excellent critical thinking. String dates are a disaster waiting to happen. Let me revise.

---

Why Strings Are Dangerous

Problems you identified:

- ❌ "Sat 11 Oct" - Which year? 2025? 2026?

- ❌ "10am-12pm" vs "10:00 AM" vs "10-12" - Parsing chaos

- ❌ No timezone info - Is "10am" in Melbourne time? User's local time?

- ❌ Can't validate: Database accepts "Blurgsday 99 Octember"

- ❌ Can't query: "Find gatherings in the next 7 days" requires string parsing

- ❌ Agent gets messy data: Has to interpret "Sat" vs "Saturday" vs "2025-10-11"

You're missing nothing - this is a real problem I oversimplified! These weren't "coding wins" - I didn't write most of the code myself. But I learned to ask the right questions: What breaks if we do it this way? What's the user impact? Is this test code or production code?

On trying to understand the code

While working in tech for over a decade and building dozens of apps, I often rely on my trusty engineers for this work, and they rely on my strengths in deeply understanding users and customer problems, in designing and validating solutions with users. There's the old debate about whether PM or designers should learn to code. I was always willing and curious, but code had always been impenetrable, and didn't click with how my brain works. The cost of learning was too high, the engineers were capable, and we always managed to work something out together, based on our strengths. So I gave up on code. Until AI agents like Claude Code made it possible to patiently explain every line until I understood.

Amica's backend architecture diagram, as I understood it

It seems...irrational, even ludicrous, to spend days trying to understand the fundamental implementation details of programming, and not getting one inch further ahead in AI work. Yet it felt necessary. Most of the AI product work requires more than just writing prompts, evals, and choosing the right model, or the orchestration of agent and tool calling. To deliver the AI product experience, it involves a big chunk of fundamental architecture and software development, before the small but powerful part of AI magic.

On being in the shoes of the builder

Building the app piece-by-piece at first felt against the grain of me as a designer: from database, to different backend components (Google Places API, backend API endpoints, agent schema and logic) to wiring it up with the frontend to get the basic app working with no way to create users, or having dynamic timeslots. I built many MVPs and descoped things before, so this shouldn't feel new. Just like an architect, I knew what I wanted the end vision should look like, yet the foundation pit looked nothing like the house they had in mind!

But by building it step-by-step, I understand the flow in a much deeper way. The bricks, the plumbing make sense, even before the final touches, the windows and doors were yet in place. I remember the exact exciting moment when the backend and frontend was 'connected' successfully, even though the UI shows nothing different, I could see from the browser console that it fetched all the data related to a gathering, and we just needed to display them!

On understanding engineering trade-offs

I could see why some changes are much more straightforward than others:

The small change that requires a database migration

Mixing structured and unstructured data

Adding venueType requires a database migration (!) and touches many parts of the code, but adding a Google Maps link to the recommended venue was straightforward because the data is passed from the Google Places API response to the agent, in JSON format, and not defined in our database.

The initial create gathering form has free-text fields for cuisine preferences and dietary restrictions, but this brought me worries about input quality! Especially when such inputs are used for the venue search and agent analysis. I thought it was simple to use a common UX pattern: a structured set of cuisine and dietary options, and a catchall 'Other' where users can enter free-text. But this is a classic case of mixing structured and unstructured data (other/free text). It requires different validation and ideally two different database columns! Now I get how the user-friendly choice can be a backend headache!

On the benefits of infrastructure understanding

Installing dependency packages makes me fully appreciate the open source community, as well as the fragility and security implications. Understanding the file structure convention for code bases made it much easier to find files to fix. Having to stumble through production deployment and running into TypeScript strict mode errors, connecting database environment variables made me more aware of things that could go wrong during deployment, rather than a black box, 'the build has failed'.

My original hypothesis I wanted to test was that understanding infrastructure makes me better at AI product decisions". After the two weeks, here's what I'm thinking: Yes, but the value isn't in coding ability - it's in systems thinking.

I can now:

Evaluate technical trade-offs engineers face

Understand why "just use an agent" isn't always the answer

Appreciate the 80% of work that makes AI products production-ready

Ask better questions about architecture, not just prompts

Most importantly, I now see AI as one component in a system, not the system itself.

On applying these learnings

I built this 2 weeks ago. I haven't yet applied these learnings to a shipped AI product at scale. I don't know if my timezone bug fix would hold up in production, or if my agent framework evaluation would match what an experienced engineer would choose.

But I do know this:

I went from blind trust in autonomous agents → informed evaluation of their suggestions

I went from "the build failed" (😨 🤷🏻♀️) → investigating which error, why, what to try next 🕵🏻♀️

I went from "just use an agent" → understanding when NOT to, and why

I don't want to be an engineer who happens to work on AI products. I want to be an AI product person who understands engineering deeply enough to make better product decisions.

Two weeks of pair programming with Claude Code was humbling. It showed me how much I still don't know. But it also showed me that AI tools can lower the learning curve enough that non-engineers can build real technical intuition - not by copying code, but by slowing down and insisting on understanding why before moving to how.

Is 2 weeks enough? Definitely not. But it's a foundation I can build on.

Tech stack and reasons

Backend

TypeScript

Prisma schema for database schema

PostgreSQL database

Express for API routing

OpenAI for agent output

Vercel AI SDK & Zod schema for structured agent output

Google Places API for venue search

Resend for magic link & JWT for login session

Frontend

React for component-based architecture

TypeScript for type checking

Vite for hot reload, JSX -> JavaScript compile

Infrastructure

Railway (backend)

Vercel (frontend)

Reasons for tech choices:

TypeScript: type safety for JavaScript applications, modern tech stack, both backend and frontend, checking for errors at compile time

Prisma: schema-first design. Prisma generates a type-safe database client, with less SQL to write

PostgreSQL: relational database for multi-user coordination

Express for API routing, web framework to handle HTTP requests/ responses

CORS - Allow API to accept requests from different domains

dotenv - load environment from .env files

Vercel AI SDK: lightweight library for AI features, low learning curve, excellent - TypeScript & Zod integration for typesafe structured outputs

Zod schema validation gets runtime validation and compile-time validation (with z.infer)

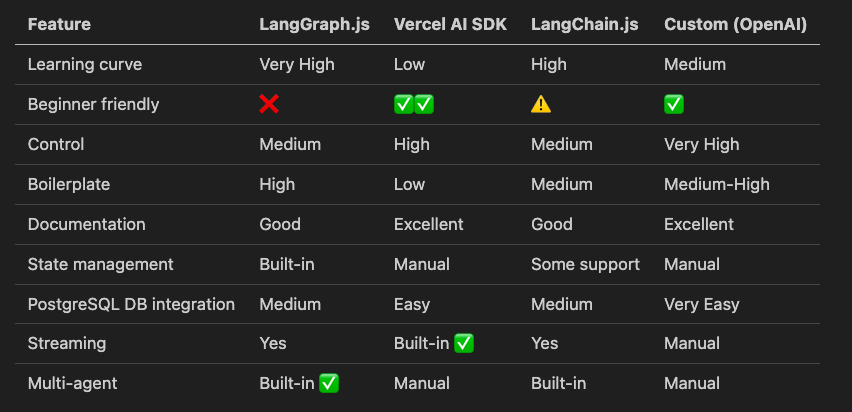

Agent framework decisions

As an initial proof-of-concept, I modified an existing crewAI template to be a meetup organiser, connected with the Google Places search API, and it ran successfully. But the downside of this Python template is that while it works on the backend with curl commands, it is not practical. I want Amica to be a web app that people can interact with in a graphical user interface.

I naively thought I could reuse Python CrewAI agents in the full-stack TypeScript app. But what a rookie error! This doesn't work at all when you have a language mismatch (Python vs JavaScript)! I asked Claude Code to consider a different agent framework, based on my specific use case and situation.

Comparison of different agent frameworks: LangGraph, Vercel AI SDK, LangChain, or Custom based on OpenAI framework

Choosing an agent framework is like choosing any technology:

Start with requirements: What's the actual workflow complexity?

Evaluate trade-offs: Learning curve vs built-in features vs control

Consider team context: Who maintains this? What's their expertise?

Beware cargo-culting: "Everyone uses LangChain" ≠ "LangChain fits my needs"

I learned that knowing when NOT to use the "industry standard" framework is as important as knowing how to use it.

For Amica specifically:

Simple sequential workflow → Custom implementation teaches more than frameworks

Multi-user state management → Database handles this, not agent framework

Production concerns (caching, error recovery) → Next iteration, possibly Vercel AI SDK

I ended up choosing Vercel AI SDK for the low learning curve, great documentation, and focus on learning structured AI outputs, and manually instrumenting agent logic.

Technical concepts I learned

Database schema design for async workflows

Multi AI agent orchestration and handoff patterns with structured outputs

Full-stack TypeScript patterns

API endpoints using Express and CORS

React patterns: callback functions, useNavigate(), useState (), useEffect()

Production deployment (Railway + Vercel)

Using git for version control, git commit, git branch, PR and merge

Using curl commands to test backend work

Configured Resend API custom domain for mailing

Using a combination of passwordless login with a 15-minute expiry token via magic link and a 7-day JWT for longer session management

Use of middleware to protect routes

Difference between nearby search (by radius) and text search in Google Places API

Adding a new column in the database, the overflow effect of schema changes, and database migration

What's next

Amica was my first attempt at multi-agent workflow and full-stack app development from scratch. It was humbling to learn so much and peel off the complexity by building such a simple app. It offers a solid foundation to learn more complex agent patterns and explore production concerns at scale.

What works now:

Two agents run sequentially: preference analysis → venue recommendation

Each agent completes fully before the next starts

Structured outputs via Zod schema validation

Google Places API integration for venue data

The current implementation taught me sequential agent workflows and structured outputs.

Here's what I'd like to explore next:

Advanced patterns I want to learn:

Parallel agent execution: Can preference analysis and venue search run simultaneously?

Cost tracking: How much does each agent workflow cost? When do we cache vs recompute?

Error recovery: What happens when the Google Places API fails? When does the agent produce invalid output?

Production concerns for AI products:

Monitoring: How do we track agent success rates, latency, and output quality?

Guardrails: How do we ensure agents don't suggest inappropriate venues or hallucinate information?

Scale: What breaks when we go from 10 to 10,000 gatherings?

These aren't just engineering questions - they're product decisions that require understanding both user needs and technical constraints. That's what I want to get better at.