Navietta

AI-Powered Layover Travel Assistant with Transparent Reasoning

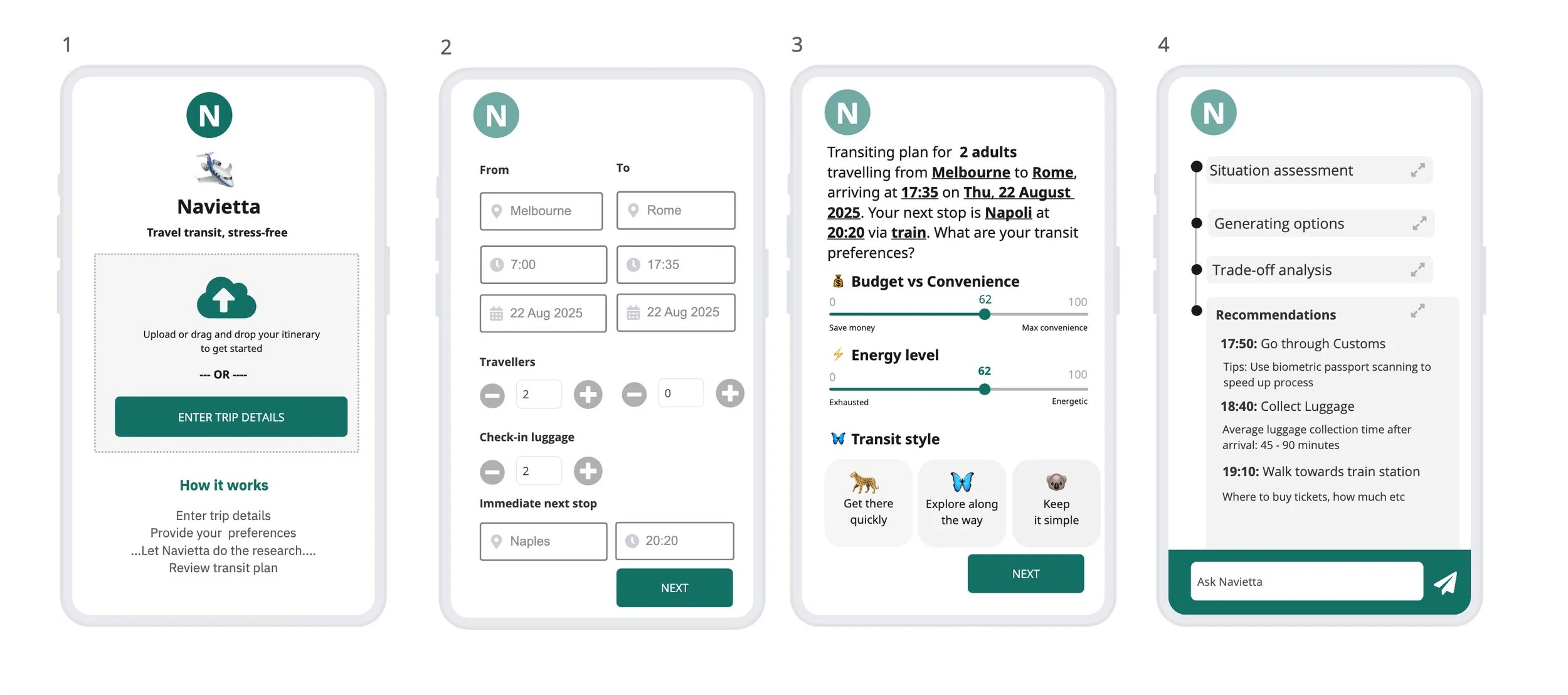

Navietta helps travellers create optimal transit plans during layovers by analysing itinerary details, preferences, and energy levels, with fully transparent AI reasoning that users can review and refine through conversational feedback.

Highlights:

Complex AI reasoning architecture with transparent system prompts and UI design

Context continuity breakthrough - solved critical technical challenge affecting user experience

Cost vs experience optimisation - managed 10x higher API costs through strategic prompt engineering

Trust patterns discovery through transparent reasoning interface testing

Summary

Navietta was the most technically complex prototype of my self-imposed 3-week AI Product Bootcamp, in which I challenged myself to build one AI prototype per week and test each with 5 real humans.

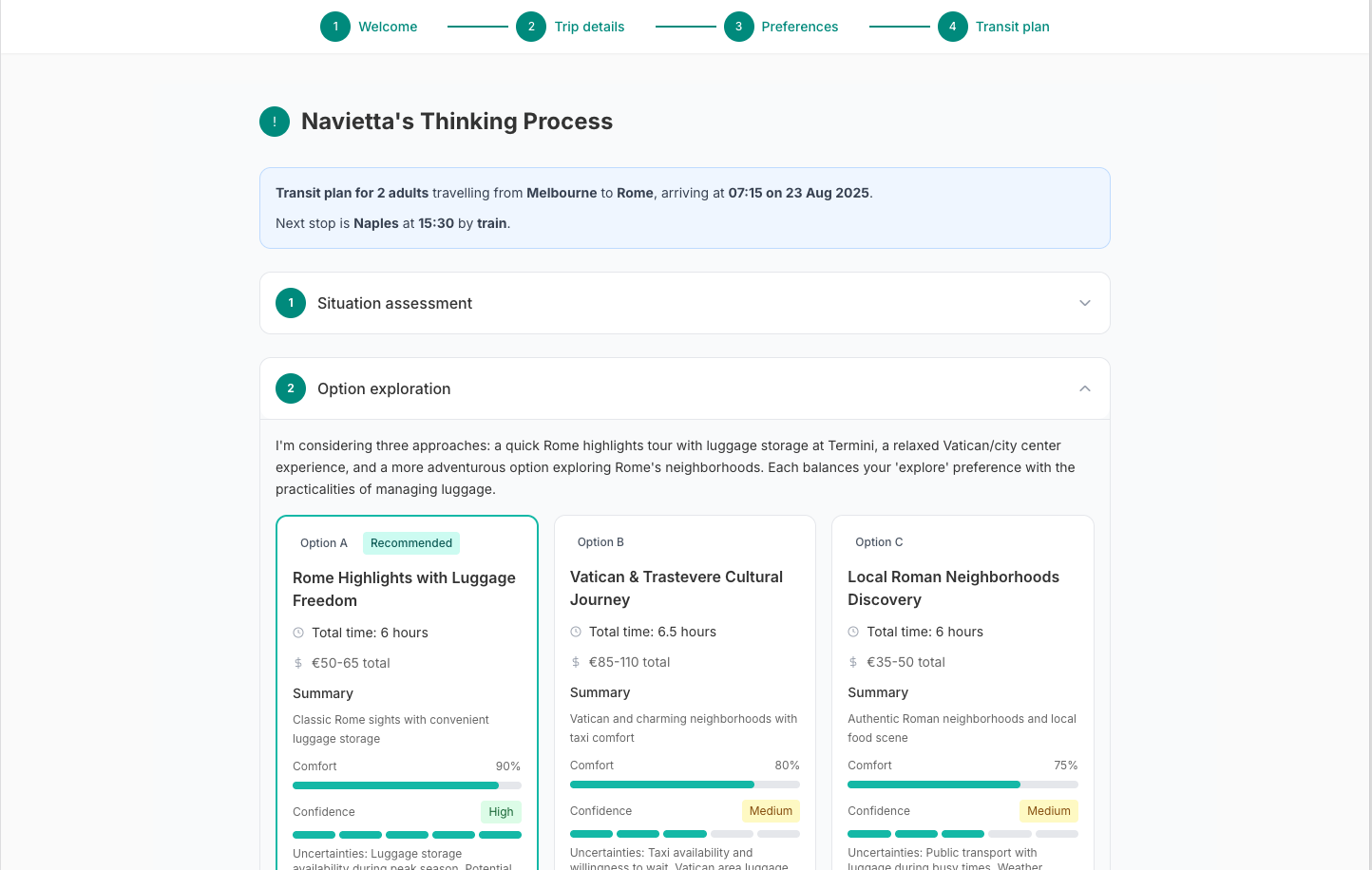

As a layover travel assistant, it takes user itinerary details and travel preferences to generate personalised transit recommendations. The key innovation? Transparent AI reasoning exposed through collapsible UI sections, plus conversational feedback to refine recommendations.

This was my first experience with complex reasoning models, context management challenges, and API cost optimisation. All three are critical lessons for any AI PM building production-ready products.

Problem Space

Initially, I wanted to create a comprehensive travel planning tool with transparent reasoning, but soon realised that the scope was too broad and the market too crowded. After some quick user research, I pivoted to focus specifically on layover travel planning.

Why layovers made the perfect AI reasoning test case:

• High enough stakes - people want good advice to avoid spoiling their trip

• Low effort threshold - travellers won't research layover options themselves

• Complex enough to showcase AI reasoning capabilities

• Bounded enough to avoid infinite complexity

Key insight: Most people focus on the main part of the trip, the time at the destination, and pay far less attention to the transit. Yet a bad transit experience can spoil an otherwise perfect holiday.

Technical Architecture: Transparent AI Reasoning

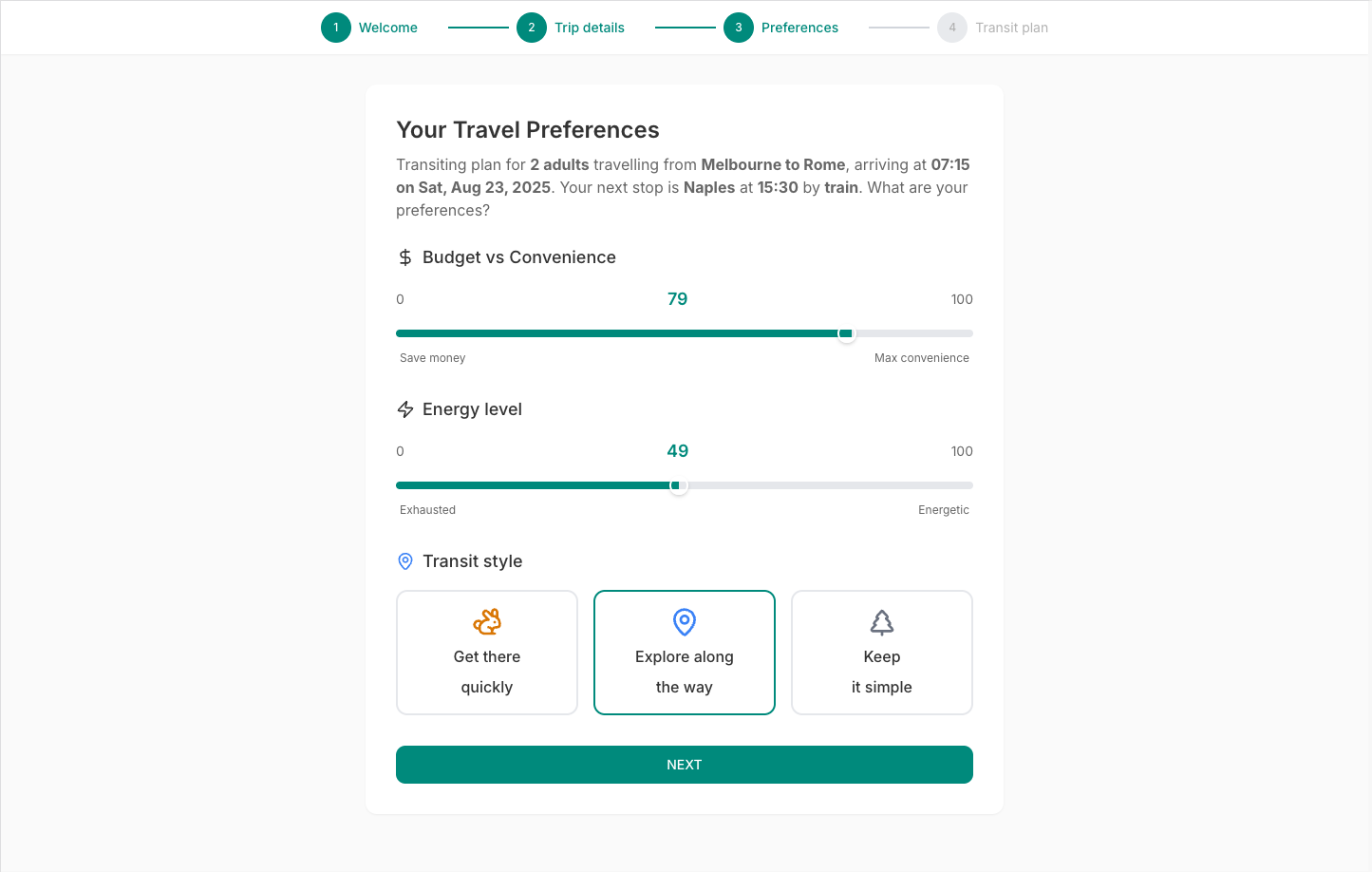

I designed the workflow around a UI form where users enter their trip details. The form produces structured input for the AI to consider when making transit recommendations.

The system prompt processes this structured JSON input:

• Number of travellers (adults/children)

• Luggage details

• Transit timing and locations

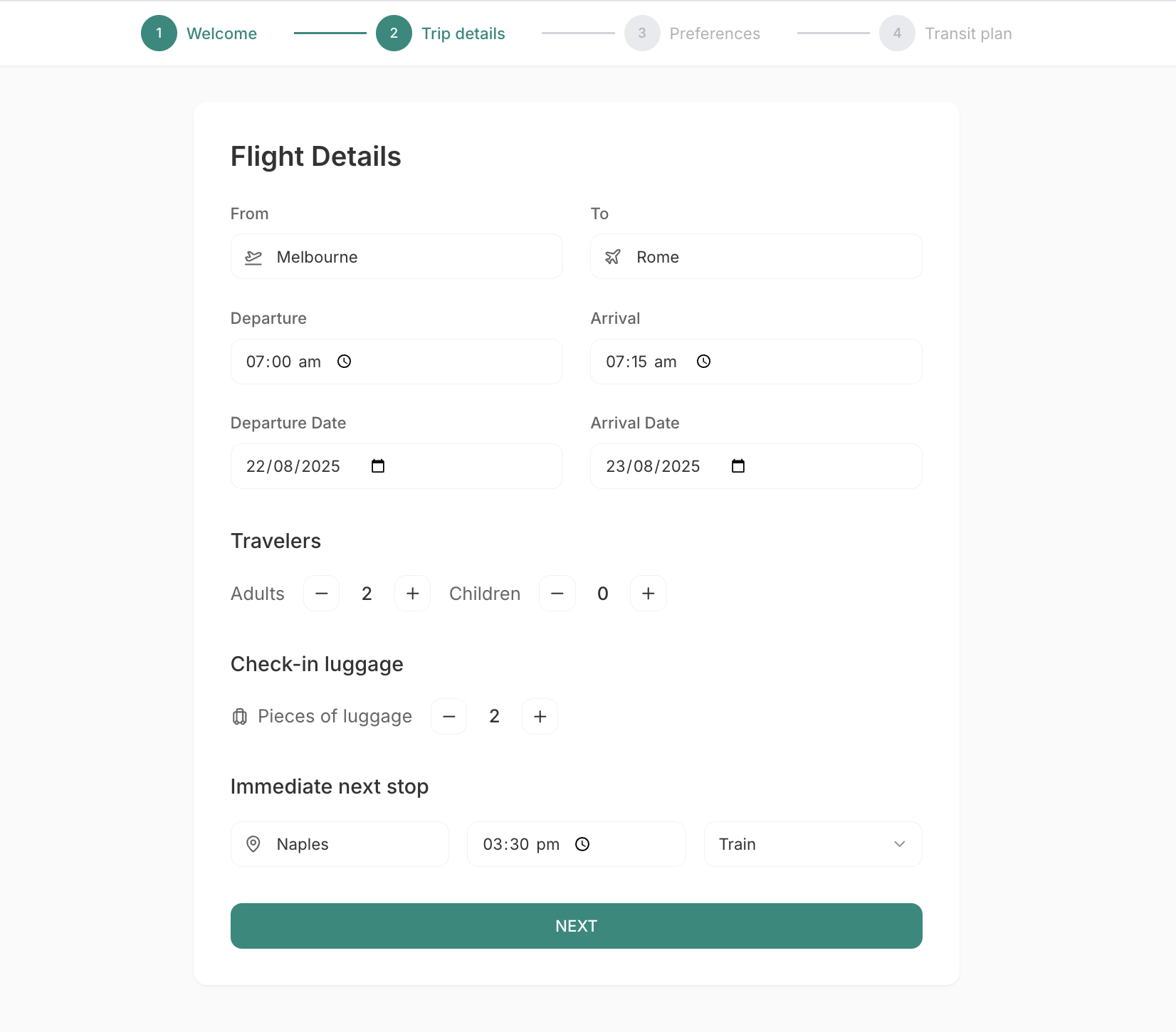

• User preferences: energy level, budget vs comfort, travel style

Pattern matching examples: I provided Italy trip scenarios for the AI to generalise from when handling other locations
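
To make the structured input concrete, here is a minimal sketch of how the form data could be modelled and serialised to JSON before it reaches the prompt. This is not the prototype's actual code: the class names, field names, and example values are all assumptions for illustration.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Travellers:
    adults: int
    children: int = 0


@dataclass
class Preferences:
    energy_level: str       # hypothetical values: "low", "medium", "high"
    budget_vs_comfort: str  # hypothetical values: "budget", "balanced", "comfort"
    travel_style: str       # hypothetical values: "relaxed", "explore"


@dataclass
class TripInput:
    travellers: Travellers
    luggage: str
    arrival: str    # location and time as entered in the form
    departure: str  # location and time as entered in the form
    preferences: Preferences


def to_prompt_payload(trip: TripInput) -> str:
    """Serialise the form data as JSON for inclusion in the prompt."""
    return json.dumps(asdict(trip), indent=2)


# Illustrative values only
trip = TripInput(
    travellers=Travellers(adults=2, children=1),
    luggage="2 checked bags",
    arrival="Rome airport, 09:00",
    departure="Rome train station, 15:30",
    preferences=Preferences("low", "comfort", "relaxed"),
)
print(to_prompt_payload(trip))
```

Keeping the input typed like this means the form, the prompt, and any later feedback step can all share one definition of a trip.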

UI Reasoning Framework

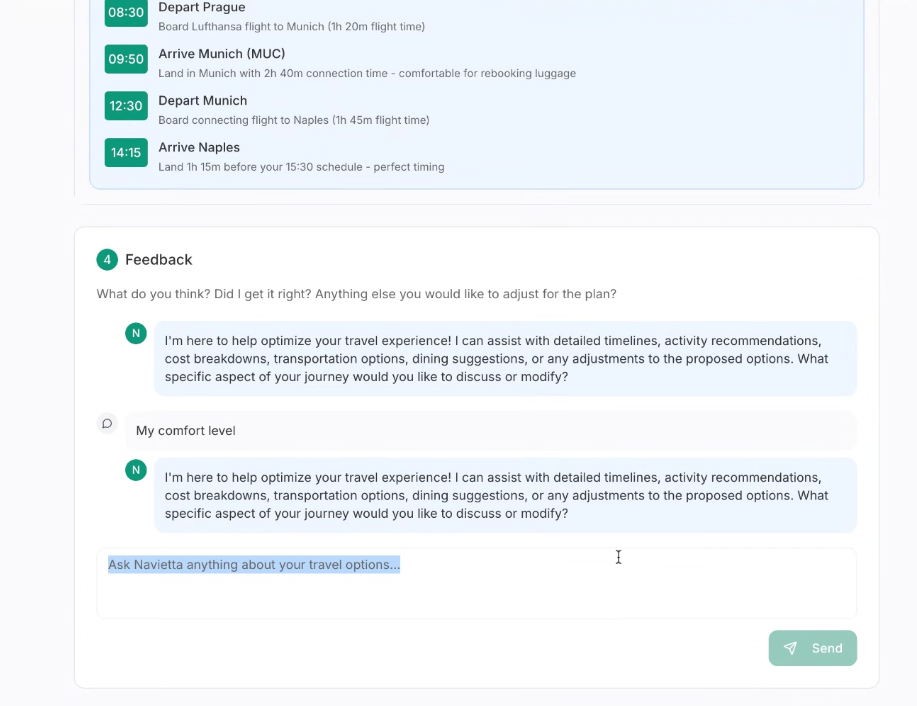

Four-step transparent reasoning exposed to users:

Situation assessment - How the AI interprets travel constraints based on itinerary details

Option generation - Multiple transit options considered

Trade-off analysis - Analyse options that best suit user preferences

Final recommendation - Clear transit plan with rationale and timeline

UX decision: I tucked the reasoning sections into collapsible accordions and kept the recommendations fully visible for quick scanning.
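
The four steps and the accordion decision can be sketched as a small mapping from a structured model response to UI sections. This is an assumption about one possible shape, not the prototype's implementation: the response keys and the `Section` type are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Section:
    title: str
    body: str
    collapsed: bool  # True means rendered inside a closed accordion


# Hypothetical response keys paired with the titles shown to users
STEPS = [
    ("situation_assessment", "Situation assessment"),
    ("option_generation", "Option generation"),
    ("trade_off_analysis", "Trade-off analysis"),
    ("final_recommendation", "Final recommendation"),
]


def build_sections(response: dict[str, str]) -> list[Section]:
    """Turn a four-step response into UI sections.

    Reasoning steps start collapsed; only the final recommendation is open.
    """
    missing = [key for key, _ in STEPS if key not in response]
    if missing:
        raise ValueError(f"response is missing steps: {missing}")
    return [
        Section(title, response[key], collapsed=(key != "final_recommendation"))
        for key, title in STEPS
    ]
```

Validating that all four steps are present before rendering avoids showing users a recommendation whose reasoning is silently incomplete.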

Critical Technical Challenge: Context Continuity

The Problem

The user feedback feature failed completely: when users asked follow-up questions, the AI gave generic responses with no memory of its previous recommendations.

Root Cause Analysis

I discovered the chat feedback system was in a separate file with no access to the main recommendation context.

Solution Evaluation Process

I collaborated with Replit Agent to evaluate three implementation approaches:

Option 1: Pass recommendations to the feedback chat as context

Option 2: Unified Claude AI experience (higher cost/latency)

Option 3: Hybrid complexity-based routing

I chose Option 2 for a coherent user experience despite the 10x cost increase. The extra cost was worth it for testing rich user interactions.
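
The core of the fix is that every follow-up call carries the original trip details and recommendation with it. Below is a minimal sketch of that idea using the role/content message shape that chat-style APIs commonly accept. The function name and parameters are assumptions, and the actual API call is left out.

```python
def build_followup_messages(
    trip_json: str,
    recommendation: str,
    history: list[dict[str, str]],
    question: str,
) -> list[dict[str, str]]:
    """Assemble a conversation that keeps the original recommendation in context.

    `history` holds earlier follow-up turns as alternating user/assistant messages.
    """
    messages = [
        # The first turn replays what the recommendation flow already produced
        {"role": "user", "content": f"Trip details:\n{trip_json}"},
        {"role": "assistant", "content": recommendation},
    ]
    messages.extend(history)
    messages.append({"role": "user", "content": question})
    return messages
```

The trade-off is visible in the function itself: the full recommendation is resent on every follow-up, which is what keeps answers coherent and also what drives token usage up.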

Cost vs Experience Optimisation

The Challenge

This is my most expensive prototype so far: the complex reasoning model consumed 10x more tokens than my previous synthesis tools.

Strategic Cost Management

Separate API keys for different prototypes and environments

System prompt optimisation - moved Rome airport knowledge from system to user prompts only when needed

Token usage tracking - monitored daily usage patterns during development vs testing phases

Result: Reduced production costs whilst maintaining a premium user experience for the testing phase.
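
Two of these tactics lend themselves to a short sketch: attaching location knowledge to the user prompt only when the trip needs it, and tallying tokens per day and phase. Everything named here (`LOCATION_NOTES`, `build_user_prompt`, `UsageTracker`) is hypothetical, and the notes text is a placeholder rather than the real prompt content.

```python
from collections import defaultdict
from datetime import date

# Placeholder knowledge snippets keyed by city; the real notes are not shown here
LOCATION_NOTES = {
    "rome": "Notes about Rome airports and onward transfers go here.",
}


def build_user_prompt(trip_json: str, cities: list[str]) -> str:
    """Attach location notes only for cities that appear in this trip."""
    notes = [LOCATION_NOTES[c.lower()] for c in cities if c.lower() in LOCATION_NOTES]
    parts = [f"Trip details:\n{trip_json}"]
    if notes:
        parts.append("Local knowledge:\n" + "\n".join(notes))
    return "\n\n".join(parts)


class UsageTracker:
    """Accumulates token counts per day and per phase (e.g. development, testing)."""

    def __init__(self) -> None:
        self.totals: dict[tuple[date, str], int] = defaultdict(int)

    def record(self, day: date, phase: str, input_tokens: int, output_tokens: int) -> None:
        self.totals[(day, phase)] += input_tokens + output_tokens
```

With this split, a trip that never touches Rome pays nothing for the Rome notes, and the tracker makes it easy to compare development days against testing days.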

User Testing Insights

I tested with 5 users using real past travel scenarios and drew the following insights from their interactions:

Transparent reasoning validation:

• 5 out of 5 users opened collapsed reasoning accordions to examine AI thinking

• Users tested different preferences to verify recommendation changes

• Higher trust when users understood the reasoning process

Accuracy threshold findings:

• Users trusted recommendations aligning with their location experience

• Trust eroded quickly when AI contradicted known facts about places

• Factual accuracy more critical than reasoning sophistication

Key insight: Users wanted both structured GUI recommendations and flexible chat refinement, which validated the hybrid interface approach.

AI PM Learnings

AI as Development Partner

Replit Agent as team engineer rather than just coding tool

Claude for technical architecture discussions and trade-off analysis

Collaborative problem-solving more effective than directive approaches

Cost Structure Reality Check

Complex reasoning models: 10x cost increase compared to simple synthesis using Claude Haiku 3.5 (Sprinthesiser)

Production implications: Premium AI experiences require careful economic models

Production Readiness Considerations

API cost management critical for complex reasoning at scale

Context continuity fundamental for multi-turn interactions

Transparent reasoning builds user trust but requires careful UX design

Factual accuracy more important than reasoning sophistication for user trust

Next steps

I'm deepening my learning by refining the prototype further with:

1. User Input Optimisation

Refining trip details and preferences screen based on testing feedback:

• Addressing conflicting input scenarios (low energy + wants to explore)

• Streamlining preference selection to reduce AI model workload

• Improving default assumptions and fallback handling
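
One possible way to handle conflicting inputs is to check for them before calling the model, so the UI can ask the user to clarify. The sketch below is only an idea for this next step: the preference keys and values are assumptions, and the single rule shown is the low energy plus exploring example from the list above.

```python
def find_conflicts(preferences: dict[str, str]) -> list[str]:
    """Return human-readable warnings for preference combinations that clash."""
    conflicts = []
    # Hypothetical keys and values; adjust to match the real form fields
    if (
        preferences.get("energy_level") == "low"
        and preferences.get("travel_style") == "explore"
    ):
        conflicts.append("Low energy selected together with a wish to explore")
    return conflicts
```

Catching the clash in plain code keeps the decision out of the prompt, which also reduces the work handed to the model.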

2. File Processing Integration

PDF document extraction for automatic population of trip details:

• User uploads travel confirmations, boarding passes, hotel bookings

• AI extracts relevant information (flight times, locations, constraints)

• Reduces manual data entry while showcasing multimodal AI capabilities

3. Performance Optimisation

Latency and cost optimisation:

• Strategic prompt engineering to reduce token consumption

• Response time improvements for better user experience

• Production-ready cost structure analysis
