Sprinthesiser

AI-powered Design Sprint Synthesis

Sprinthesiser: An AI-powered synthesis tool that helps design sprint teams extract actionable insights from expert interviews and user testing transcripts in minutes, not hours.

Highlights:

First AI product prototype, built in 3 days, tested with 6 users

Model selection, AI API integration, system prompt adjustments for reliability

Summary

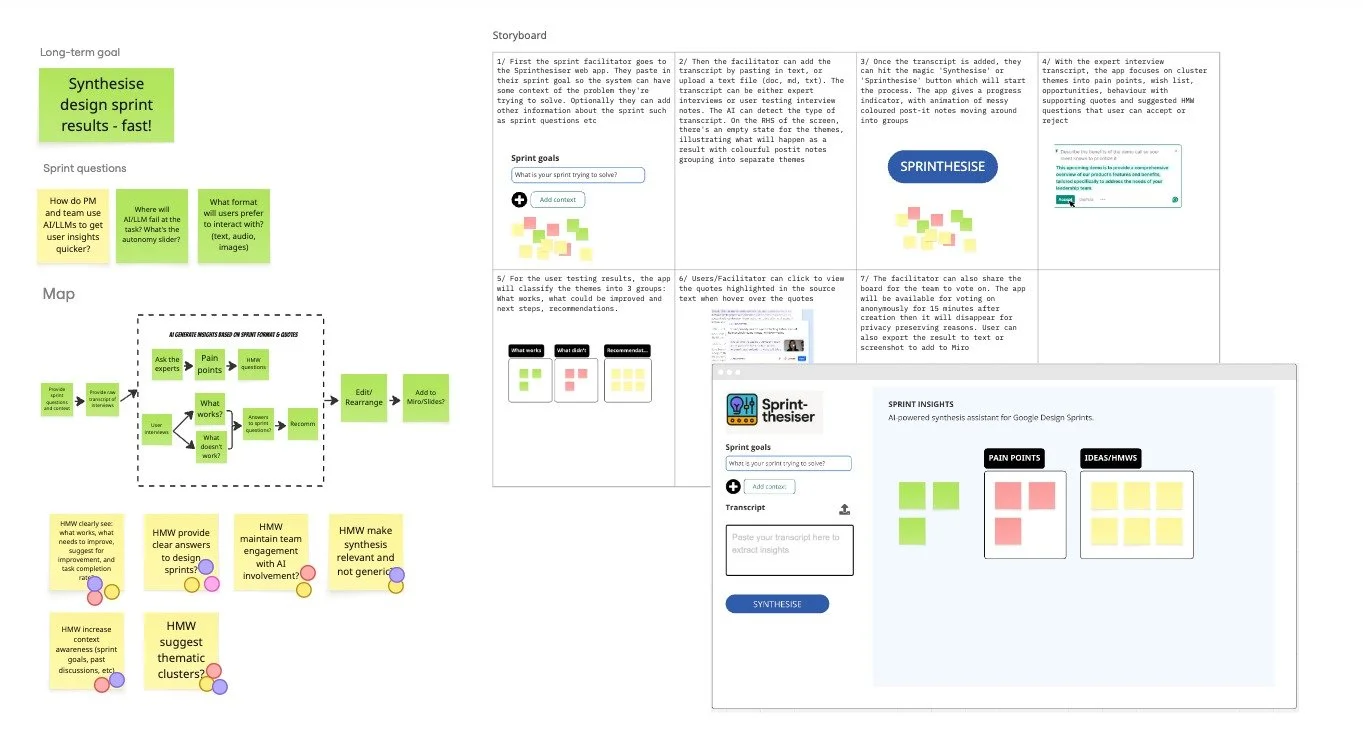

Sprinthesiser was the very first AI product prototype in my 3-week self-imposed AI Product Bootcamp, where each week I challenged myself to build one AI prototype and test it with 5 real humans.

As the name suggests, it’s an AI-powered synthesis tool that helps product teams using the GV 5-day Design Sprint framework reach their insights faster, especially when distilling insights from Expert Interviews on Day 2 and User Testing Notes on Day 5.

This was my first time hooking up a real AI API and experiencing AI trust and reliability issues firsthand.

Problem & Pivot

Initially, I planned a general user research synthesis tool. But I quickly realized this market is saturated—people already use ChatGPT or tools like Dovetail for transcript analysis.

The pivot: Focus specifically on GV Design Sprint methodology. This made sense because:

Design sprints are separate from continuous delivery cycles

The 5-day process is intense and packed—AI synthesis would be invaluable

Design sprint terminology is distinct (expert interviews, HMW questions), giving AI better context

Process & Prototyping

I followed the design sprint process: sprint goal, interviews (including a mock persona played by ChatGPT), sketching, storyboarding, then fed everything into a PRD for Replit.

Build approach:

Core functionality with mock data first

AI summarization with structured outputs

Real API integration

Additional features (voting, export)

UI refinement last
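Building against mock data first works best when the UI depends on an interface rather than on the API client directly. The sketch below is a minimal illustration under that assumption: the `Insight` schema, the `Summarizer` protocol, `MockSummarizer` and `render` are all hypothetical names, and the canned insights are placeholder data, not test results.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Insight:
    """One synthesised insight tied back to its source (hypothetical schema)."""
    category: str  # e.g. "pain point"
    summary: str
    quote: str     # verbatim excerpt from the transcript
    source: str    # who said it, e.g. "Expert 1"


class Summarizer(Protocol):
    def summarize(self, transcript: str) -> list[Insight]: ...


class MockSummarizer:
    """Returns canned placeholder insights so the UI can be built before any API exists."""

    def summarize(self, transcript: str) -> list[Insight]:
        return [
            Insight("pain point", "Synthesis takes too long", "We spend hours on sticky notes", "Expert 1"),
            Insight("opportunity", "Faster Day 2 readouts", "I want the highlights before lunch", "Expert 2"),
        ]


def render(summarizer: Summarizer, transcript: str) -> list[str]:
    # The UI layer only knows about the Summarizer protocol, so an
    # API-backed class can later replace MockSummarizer without UI changes.
    return [f"[{i.category}] {i.summary} ({i.source})" for i in summarizer.summarize(transcript)]


print(render(MockSummarizer(), "any transcript"))
```

Swapping in the real API then means writing one more class with the same `summarize` signature, which matches the "mock data first, real API later" ordering above.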

AI Considerations

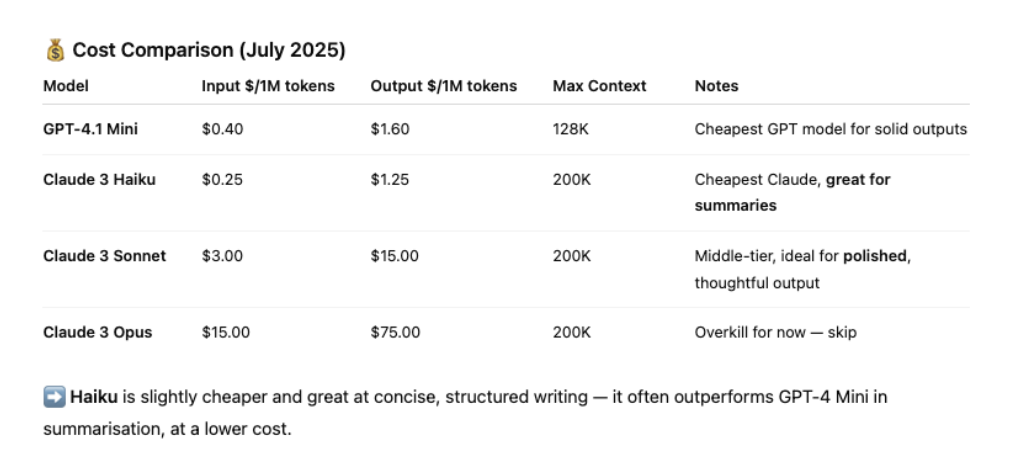

Model Selection: I systematically evaluated models based on use case, cost, and quality thresholds. I chose Claude Haiku for its summarisation strength, cost-effectiveness, and 200K-token context window (vs. GPT-4o mini's 128K).
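Context window size matters most on Day 2, when several expert interview transcripts may need to go into a single request. The sketch below checks which model would fit a batch of transcripts, assuming a rough 4-characters-per-token heuristic (an approximation, not a real tokenizer) and a hypothetical 4,000-token reserve for the model's reply. The model labels are illustrative display names, not API model IDs; only the window sizes come from the comparison above.

```python
# Context windows in tokens, as quoted in the comparison above.
# Keys are illustrative labels, not API model identifiers.
CONTEXT_WINDOWS = {
    "Claude Haiku": 200_000,
    "GPT mini": 128_000,
}

CHARS_PER_TOKEN = 4  # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    # Ceiling division so any non-empty string counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(transcripts: list[str], reserve_for_output: int = 4_000) -> list[str]:
    """Return the models whose window holds every transcript plus an output budget."""
    needed = sum(estimate_tokens(t) for t in transcripts) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


# Five long transcripts (~150K tokens in total) only fit the larger window.
print(models_that_fit(["x" * 120_000] * 5))
```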

Prompt Engineering: Designed role-based system prompts embedding Design Sprint methodology directly into AI instructions, ensuring outputs matched the framework (HMW questions, categorized insights).
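A role-based system prompt can be assembled from a fixed role, the sprint goal, a day-specific task, and an output contract. The sketch below is a hypothetical illustration of that structure; the wording, `SPRINT_DAY_TASKS`, and `build_system_prompt` are assumptions, not the prompts used in Sprinthesiser.

```python
# Hypothetical day-specific instructions keyed by sprint day.
SPRINT_DAY_TASKS = {
    2: (
        "Synthesise the expert interview transcript. Group findings into "
        "categories and rephrase each problem as a 'How Might We' (HMW) question."
    ),
    5: (
        "Synthesise the user testing notes. For each insight, state whether it "
        "supports or challenges the sprint goal."
    ),
}


def build_system_prompt(day: int, sprint_goal: str) -> str:
    """Compose a role-based system prompt for one sprint day (hypothetical wording)."""
    if day not in SPRINT_DAY_TASKS:
        raise ValueError(f"no synthesis task defined for day {day}")
    return "\n".join([
        # Role: anchors the model in the Design Sprint methodology.
        "You are an experienced facilitator of the GV 5-day Design Sprint.",
        f"The sprint goal is: {sprint_goal}",
        SPRINT_DAY_TASKS[day],
        # Output contract: a fixed JSON shape the UI can render.
        'Respond only with JSON: {"insights": [{"category": str, '
        '"summary": str, "quote": str, "source": str}]}',
        "Every quote must be copied verbatim from the transcript.",
    ])


print(build_system_prompt(2, "Help teams synthesise interviews faster"))
```

Keeping the methodology in the role and task lines, and the JSON shape in a separate contract line, lets the Day 2 and Day 5 prompts share everything except the task.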

Consistency: Set temperature to 0 to favour reliable insight generation over creativity, which achieved ~80% consistency in JSON structure during testing.
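One way to measure "consistency in JSON structure" is to run the same transcript several times and count how many outputs parse into the expected shape. The sketch below assumes the Anthropic Messages API keyword arguments (`model`, `max_tokens`, `temperature`, `system`, `messages`); the model ID, `max_tokens` value, and the `insights` schema are assumptions, and the code only builds the request rather than calling the API.

```python
import json

# Hypothetical schema: each insight must carry these keys.
REQUIRED_KEYS = {"category", "summary", "quote", "source"}


def request_params(system_prompt: str, transcript: str) -> dict:
    """Keyword arguments for a Messages API call (model ID and max_tokens are assumptions)."""
    return {
        "model": "claude-3-haiku-20240307",
        "max_tokens": 2048,
        "temperature": 0,  # favour repeatable output over creative variation
        "system": system_prompt,
        "messages": [{"role": "user", "content": transcript}],
    }


def is_valid_structure(raw: str) -> bool:
    """True if the output parses as JSON and every insight has the required keys."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    insights = data.get("insights") if isinstance(data, dict) else None
    if not isinstance(insights, list):
        return False
    return all(isinstance(i, dict) and REQUIRED_KEYS.issubset(i) for i in insights)


def structural_consistency(outputs: list[str]) -> float:
    """Share of runs whose raw output matches the expected JSON shape."""
    if not outputs:
        return 0.0
    return sum(is_valid_structure(o) for o in outputs) / len(outputs)
```

With the official Python SDK, the parameters would be passed as `client.messages.create(**request_params(...))`, and the text of each reply collected into `outputs`. Note that temperature 0 reduces variation but does not guarantee identical output across runs, which is why a structural check is still useful.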

The magic moment: When I first connected the Claude API and saw real data flowing into structured insights—quotes, sources, pain points all organized perfectly. My first taste of delivering an AI-powered product experience.

Testing & insights

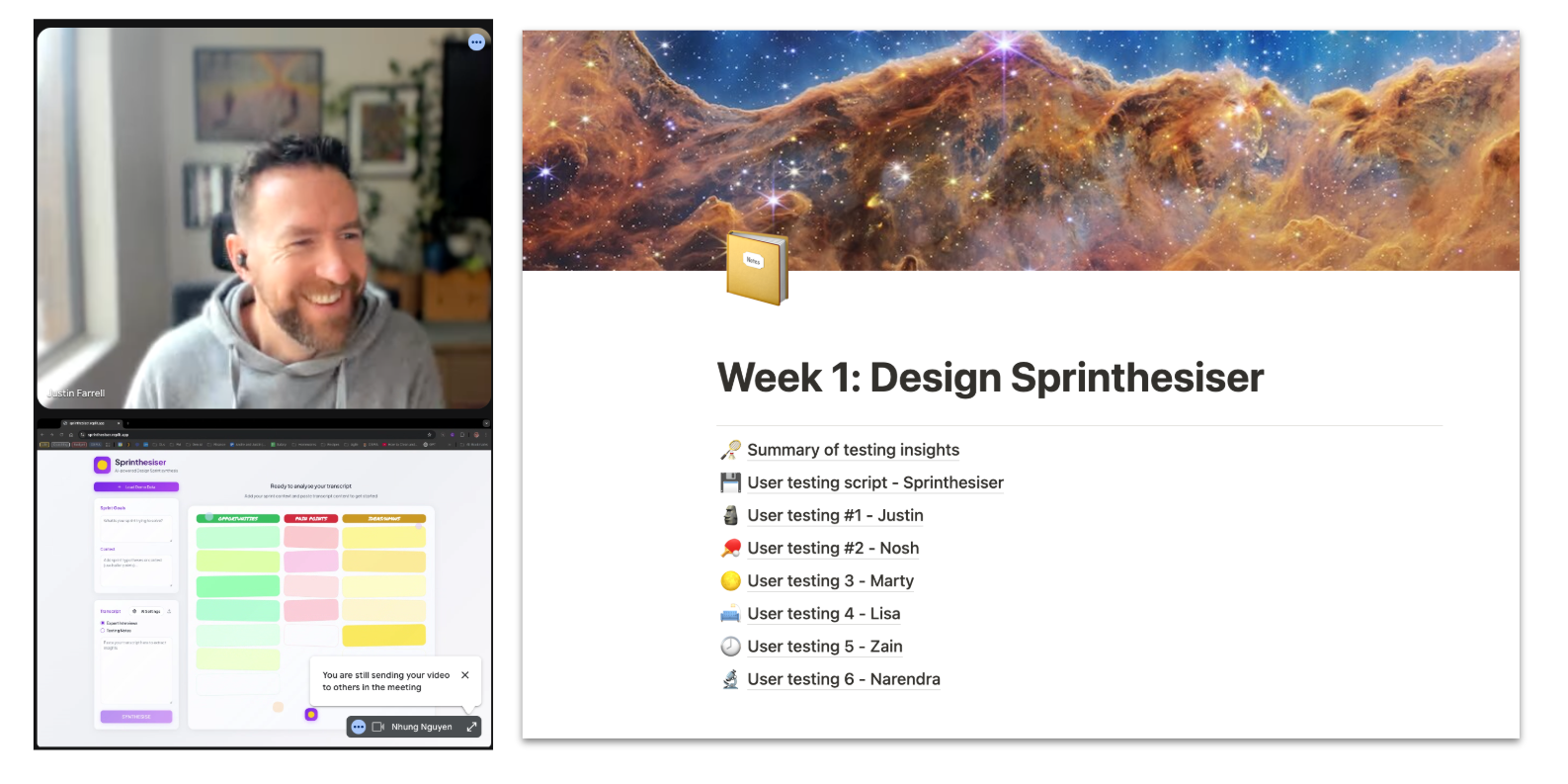

Tested with 6 PMs and designers via 1:1 video calls. I deliberately took handwritten notes (no AI transcription) to force attention and pattern recognition.

Product insights:

Audience fit: Much stronger appeal for people experienced with the GV Design Sprint than for those using variations of it

GUI vs. Chatbot value: Visual presentation plus user control (editing prompts, rejecting suggestions) provided value over pure chat interfaces

Reliability challenge: AI's non-deterministic nature means the same transcript can generate different insights on each run, which is potentially a feature rather than a bug if provenance is clear
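Making provenance clear can start with checking that every quote an insight cites actually appears in the source transcript, so varying runs can still be trusted line by line. The sketch below is a hypothetical check: `verify_provenance` and the `grounded` flag are illustrative names, and the case- and whitespace-insensitive matching is an assumption (paraphrased quotes would be flagged as ungrounded).

```python
def verify_provenance(insights: list[dict], transcript: str) -> list[dict]:
    """Flag each insight with whether its quote appears verbatim in the transcript."""
    # Normalise whitespace and case so line breaks in notes don't break matches.
    normalised = " ".join(transcript.split()).lower()
    checked = []
    for insight in insights:
        quote = " ".join(insight.get("quote", "").split()).lower()
        # An empty or missing quote cannot be traced to the source.
        checked.append({**insight, "grounded": bool(quote) and quote in normalised})
    return checked


transcript = "We spend hours\non sticky notes every sprint."
results = verify_provenance(
    [{"quote": "we spend hours on sticky notes"}, {"quote": "We love AI"}],
    transcript,
)
print([r["grounded"] for r in results])
```

In a GUI, ungrounded insights could be visually marked so users can reject them, which fits the user-control finding above.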

Process insights:

Embrace imperfection and test early

Narrow focus but recruit the right audience

Constrain scope ruthlessly to test core hypothesis

Conclusion

Sprinthesiser gave me strong foundational knowledge of what it’s like to create AI-powered products: from API integration to prompt engineering to user testing AI experiences. This confidence in "learning by doing" enabled me to tackle more complex challenges in my next prototypes: real-time data integration, multimodal interfaces, and advanced reasoning models.